“What I want is to make sure that good data isn’t corrupted by bad data from a system that has no right updating that field.”

— Rob Zare

The need for a smarter sync

Every company that relies on data faces growing challenges. Companies are connecting more applications to their CRM, which erodes data quality at the center, while end users and system-specific AI erode it at the edges.

To avoid things getting worse, companies need a system that:

- Understands which system has authority for which data

- Manages data where it exists without trying to store it centrally

- Optimizes for data quality, not connections

- Learns and improves data quality over time
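The first requirement in the list above, knowing which system has authority for which data, can be illustrated with a small sketch. This is only an illustration under stated assumptions, not a description of any vendor's product: the `FIELD_AUTHORITY` map, the system names (`crm`, `billing`, `chatbot`), and the `apply_updates` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

# Hypothetical authority map: which systems may write each field.
FIELD_AUTHORITY: Dict[str, Set[str]] = {
    "email": {"crm"},
    "lifecycle_stage": {"marketing_automation"},
    "billing_status": {"billing"},
}


@dataclass
class Update:
    source: str  # system proposing the change
    field: str   # field it wants to write
    value: str   # proposed new value


def apply_updates(record: Dict[str, str],
                  updates: List[Update]) -> Tuple[Dict[str, str], List[Update]]:
    """Apply only updates from a system with authority over the field.

    Returns a new record plus the list of rejected updates; the input
    record is left unmodified.
    """
    result = dict(record)
    rejected: List[Update] = []
    for u in updates:
        # Fields missing from the map have no authoritative writer.
        if u.source in FIELD_AUTHORITY.get(u.field, set()):
            result[u.field] = u.value
        else:
            rejected.append(u)
    return result, rejected


record = {"email": "ana@example.com", "billing_status": "active"}
updates = [
    Update("billing", "billing_status", "past_due"),  # authoritative
    Update("chatbot", "email", "unknown@example.com"),  # not authoritative
]
new_record, rejected = apply_updates(record, updates)
```

In this sketch the billing system's change is accepted, while the chatbot's attempt to overwrite the email address is set aside for review rather than silently applied. A real system would also need the "why" and "for whose benefit" context, which a static map like this does not capture.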

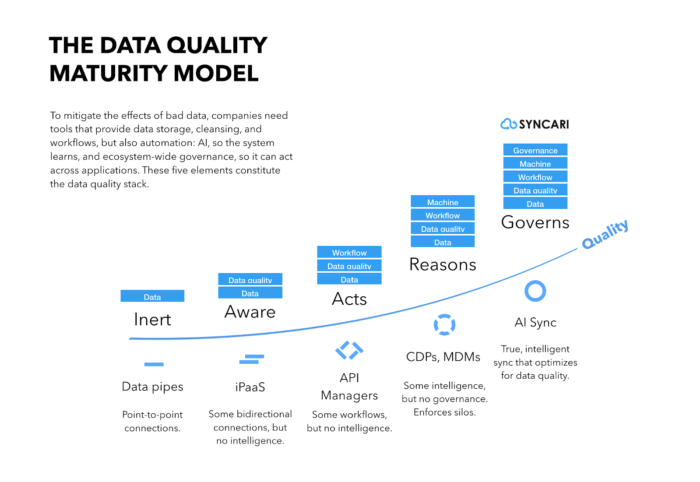

Such a system would need to play air traffic controller to maintain data quality, not just data connection. It would need a schema for understanding where data goes, but also why, and for whose benefit. It would need to understand and safeguard the purpose of the data to the business. I’ve outlined this in what I call the data quality maturity model.

The data quality maturity model

To mitigate the effects of bad data, companies need tools that provide data storage, cleansing, and workflows, but also automation: AI, so the system learns, and ecosystem-wide governance, so it can act across applications. These five elements constitute the data quality stack.

The higher your business ascends on the model, the more complete your data stack, and the more easily you can mitigate the effects of bad data. At the “governs” stage, the system managing your data ecosystem actually improves data quality over time. I call this a true AI sync. Companies at this stage see greater gains across every business unit:

- Marketing campaigns outperform and build more pipeline.

- Sales teams spend more time selling and close more deals.

- Product teams build more successful products.

- Business teams reduce risk.

- Customers renew and expand.

- Companies spend less on third-party data.

We’re talking about clawing back part of the 15 to 20 percent of your company’s revenue that’s lost each year.

Today, nobody offers the complete “governs” package

No vendor offers a complete solution, and many may not be around long enough to build one. By 2023, up to two-thirds of existing iPaaS vendors will merge, be acquired, or exit the market, reports Gartner, and the iPaaS market is bifurcating between general-purpose applications and domain- or vertical-specific ones.¹⁶

Today, many of these vendors are launching AI offerings. There’s Dell Boomi’s Boomi Suggest, Informatica’s CLAIRE, SAP’s integration content advisor, SnapLogic’s Iris AI, and Workato’s Workbot. But none of these products has AI at its core, and all of them still treat connections as point-to-point and data as just data. Some are simply SDKs for building AI that require extensive client-side developer resources to deploy. None offers code-free interfaces or tools that citizen integrators can actually take advantage of.

- Data pipes simply move data back and forth without intelligence. (Dell Boomi, MuleSoft, IBM App Connect)

- Integration platforms (iPaaS) move data back and forth with some conditional logic. Also known as cloud connectors. (Zapier, Oracle Data Manager)

- API managers simply ensure API conventions are met and documented. (MuleSoft, Microsoft Azure API Management)

- CDPs offer business logic but introduce complexity, cost, and errors. (Blueshift, Segment)

- MDMs are impractically expensive, difficult to implement, and lack business logic. (SAP Master Data Governance, IBM MDM solutions, Orchestra Networks EBX)

Short-term recommendations to improve data quality

What can a business do? In the near term, you can restructure your team to better manage the influx of data and point-to-point connections.

Create the role of API manager. An API manager’s job is to assemble an integration strategy team (IST) to invest in API planning, design, and testing, and to help citizen integrators accomplish their goals while doing less harm.

Invest in API lifecycle management. Your team needs to document its existing data flows, mappings, and configurations, and investigate each business unit’s integration needs.

Adopt AI integration systems. These may help combat the damage done by system-specific AIs by automating some processes, like elastic healing and self-scaling. Some systems can make “next step” recommendations when developers are planning integration flows, and provide intelligence into how data is used throughout the company.

Don’t overcomplicate things. The last thing your data ecosystem needs is more systems. Investigate ways to have fewer.

In the long term, you need technology that does this for you. Such a system would have to apply data governance globally without centralizing data, feature AI at its core, and offer a code-free interface usable by citizen integrators.

And that’s exactly what we’ve reassembled the Marketo sync team to build.

About the author: Nick is a CEO, founder, and author with over 25 years of experience in tech who writes about data ecosystems, SaaS, and product development. He spent nearly seven years as EVP of Product at Marketo and is now CEO and Founder of Syncari.