Every day I see another SaaS app go ‘must-have’ viral and I wonder how long the center can hold. When the average midsize startup uses 123 SaaS tools (enterprises use thousands) and 78 percent of organizations are increasing their spend on cloud services, all I can think about are all those messy connections that lead to data quality problems. And what a drag they are on all those businesses.

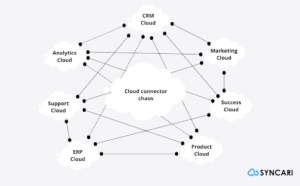

If the tangle of cloud connectors were visible, we’d see it for the cable management horror that it is. But it isn’t, so we permit it to quietly, incrementally strangle overall data quality as we pile on more.

It’s time we take a stand—manage your connectors today because it’s about to get a whole lot worse.

No escaping Metcalfe’s Law

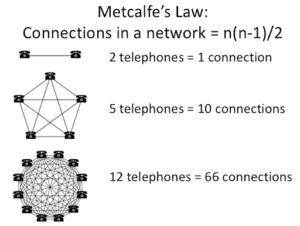

There’s a law to explain why the state of cloud connectors is so bad. Former FCC Chairman Reed Hundt once said it gives the most understanding to the workings of the internet, and it’s actually quite simple: When you add nodes to a network, the number of possible connections grows roughly with the square of the number of nodes, so the network quickly becomes far more complex to manage. Consider the accompanying figure.

When you have two nodes, there’s one connection. When you jump to five nodes, you suddenly have ten connections. And if you have 14 nodes (the average number of apps companies have hooked into their CRM), you have 91.

Companies don’t necessarily connect every app to every other app, but the principle holds: Network complexity grows with surprising rapidity.
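The arithmetic behind those numbers is the handshake formula, n(n-1)/2: each of n nodes can link to n-1 others, and each link is shared by two nodes. Here is a minimal Python sketch, assuming the worst case where every app connects directly to every other app. The function name is mine, purely for illustration.

```python
def pairwise_connections(nodes: int) -> int:
    """Count the distinct links when every node connects to every other node."""
    # Each of `nodes` endpoints pairs with (nodes - 1) others;
    # dividing by 2 avoids counting the same link from both ends.
    return nodes * (nodes - 1) // 2


for n in (2, 5, 14):
    print(f"{n} nodes -> {pairwise_connections(n)} connections")
```

Real environments sit somewhere below this ceiling, since not every pair of apps is wired together, but the ceiling itself rises quadratically.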

If every connection were of high quality, this wouldn’t be so bad. But cloud connectors are a real mixed bag. Imagine a server room where every cable is a different make and model, manufactured by workmen of highly unequal skill, who don’t speak the same language. Some cables only pass data one way. Some pass data and power. Others drop every third data packet. And some are even wireless.

And then imagine that several generations of IT managers have each enforced their own indecipherable schema on all the labels and you’ve got a sense of how most cloud-connected environments look.

Enterprise Connector Chaos

Data quality and B2B companies

This chaos is why most B2B companies are bleeding data quality. Data gets lodged in silos. Systems overwrite other systems with wrong data. Apps disagree. Data is unavailable, or outdated, or decays. Rapid system configuration makes it all worse: More people throughout the company switch cables when it suits them.

The overhaul is nigh. Cables to anywhere, switched by anyone, aren’t helping us realize the promise of all the data we’re buying and holding: making data accurate, available, and consistent throughout the business. So here’s an idea.

Solving data quality problems: Move from data connectors to data integrity

We can’t just accept the data ecosystems we’re given. We have to plan for the ones we want, and the quality we want, and that necessitates some pruning. Unless an industry authority emerges to impose standards on the market for cloud connectors, which is unlikely, every IT team needs its own central tool for data quality control. A sort of distributed system for truth that manages all other systems and avoids data quality problems.

Such a system could provide:

- Governance – Authority over all data systems, with bidirectional syncs to the hub

- Standardization – One global data schema for all your SaaS apps

- Conditional logic – Intelligence to maintain quality and consistency and reduce entropy
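To make those capabilities concrete, here is a rough Python sketch of what such a hub might look like. Everything in it is hypothetical: the `Hub` class, the `FIELD_MAPS` table, the app names, and the single "never overwrite a known value with a blank" rule are assumptions chosen for illustration, not a description of any real product.

```python
from dataclasses import dataclass, field

# Hypothetical mappings from each app's field names to one global schema
# (standardization). Real apps would need far richer mappings.
FIELD_MAPS = {
    "crm": {"Email": "email", "CompanyName": "company"},
    "billing": {"email_address": "email", "org": "company"},
}


@dataclass
class Hub:
    # Golden records in the global schema, keyed by normalized email.
    records: dict = field(default_factory=dict)

    def ingest(self, app: str, payload: dict) -> dict:
        """Sync from an app into the hub. Assumes the payload carries an email."""
        mapping = FIELD_MAPS[app]
        incoming = {mapping[k]: v for k, v in payload.items() if k in mapping}
        key = incoming["email"].strip().lower()
        incoming["email"] = key
        current = self.records.setdefault(key, {})
        for name, value in incoming.items():
            # Conditional logic: a blank value never overwrites a known one.
            if value not in (None, ""):
                current[name] = value
        return current

    def export(self, app: str, key: str) -> dict:
        """Sync from the hub back to an app, in that app's own field names."""
        reverse = {glob: local for local, glob in FIELD_MAPS[app].items()}
        return {reverse[g]: v for g, v in self.records[key].items() if g in reverse}


hub = Hub()
hub.ingest("crm", {"Email": "Ana@Example.com ", "CompanyName": "Acme"})
hub.ingest("billing", {"email_address": "ana@example.com", "org": ""})
billing_view = hub.export("billing", "ana@example.com")
print(billing_view)
```

In this sketch the billing app's blank `org` does not erase the company name the CRM supplied, and billing gets the hub's version back in its own vocabulary. Each app talks only to the hub, never to another app.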

Such a system would reduce API calls for connected applications, unblock the ‘freeways’ of your data architecture to make data available where it needs to be, and keep it all accurate. This goes far beyond the promise of overhyped customer data platforms (CDPs). As Oracle’s EVP of CX Cloud put it, “The CDP market is a confusing place right now with lots of promise and hype, but very few solutions that can provide a comprehensive view into customer interactions across channels and applications.”

A new way of working with your data

What I’m talking about is a true and complete distributed data system that gives IT teams their first true bird’s-eye view of their data. They could turn data on and off like a tap, and run machine learning to see where it comes from and how it’s used, and to optimize its proliferation throughout the business so that it can actually serve its purpose of supporting business decisions and the customer lifecycle. They could also avoid the pesky, time-consuming tasks that data quality problems force on them.

In terms of Metcalfe’s Law, such a central system would rip out most of our legacy connectors and reduce the complexity of the system to a linear function of the number of apps rather than a quadratic one: one sync per app instead of one connector per pair of apps.

Moving from connectors to bidirectional sync

Which, to me, seems a whole lot more sustainable than 123 apps each connected to the other 122 for a potential 7,503 cloud connectors, and growing.
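To put numbers on that comparison, here is a small Python sketch that sets the worst-case mesh against a hub model. It assumes the hub needs exactly one bidirectional sync per app, which is my simplification. The function names are illustrative.

```python
def mesh_links(apps: int) -> int:
    """Worst case: every app wired directly to every other app."""
    return apps * (apps - 1) // 2


def hub_links(apps: int) -> int:
    """Hub model (assumed): one bidirectional sync per app to the central system."""
    return apps


for apps in (14, 123, 246):
    print(f"{apps:>4} apps: mesh={mesh_links(apps):>6}  hub={hub_links(apps):>4}")
```

Doubling the app count doubles the hub's syncs but roughly quadruples the mesh's potential connectors, which is the whole argument in two functions.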